Visual Slam-Based Mapping and Localization for Aerial Images

by Onur Eker 1, Hakan Cevikalp 2,* and Hasan Saribas 3

1 Havelsan, Ankara, Turkey

2 Eskisehir Osmangazi University, Machine Learning and Computer Vision Laboratory, Eskisehir, Turkey

3 Eskisehir Technical University, Faculty of Aeronautics and Astronautics, Eskisehir, Turkey

* Author to whom correspondence should be addressed.

Journal of Engineering Research and Sciences, Volume 1, Issue 1, Page # 01-09, 2022; DOI: 10.55708/js0101001

Keywords: SLAM, Mapping, Object detection, Orthomosaic, Aerial imaging

Received: 27 December 2021, Revised: 03 February 2022, Accepted: 06 February 2022, Published Online: 24 February 2022

AMA Style

Eker O, Cevikalp H, Saribas H. Visual Slam-Based Mapping and Localization for Aerial Images. Journal of Engineering Research and Sciences. 2022;1(1):1-9. DOI: 10.55708/js0101001

Chicago/Turabian Style

O. Eker, H. Cevikalp, H. Saribas, “Visual Slam-Based Mapping and Localization for Aerial Images”, Journal of Engineering Research and Sciences, vol. 1, no. 1, pp. 1-9 (2022). DOI: 10.55708/js0101001


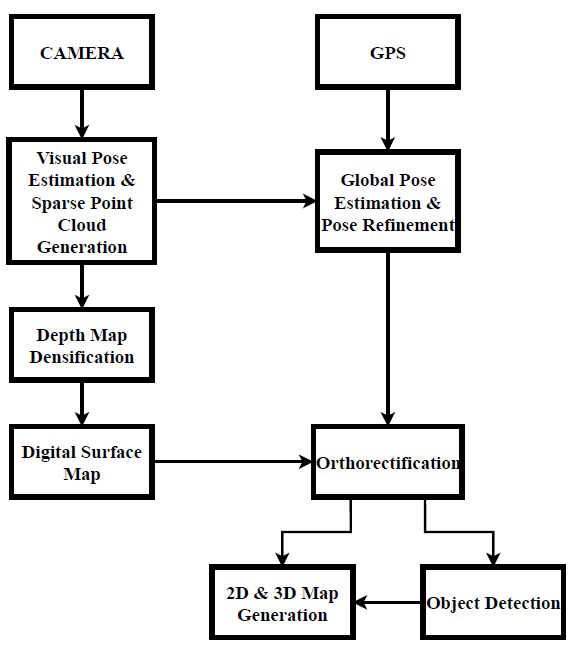

Fast and accurate observation of an area in disaster scenarios such as earthquakes, floods and avalanches is crucial for first aid teams. Digital surface models, orthomosaics and object detection algorithms can play an important role in rapid decision making and response in such scenarios. In recent years, Unmanned Aerial Vehicles (UAVs) have become increasingly popular because of their ability to perform different tasks at lower cost. A real-time orthomosaic generated with UAVs can be helpful for various tasks that require both speed and efficiency. An orthomosaic provides an overview of the observed area and helps the operator find regions of interest; object detection algorithms then help identify the desired objects in those regions. In this study, a monocular SLAM-based system that combines the camera and GPS data of the UAV has been developed to map the observed environment in real time. A state-of-the-art deep learning based object detection method is integrated into the system to detect objects in real time and acquire their global positions. The performance of the developed method is evaluated in both single-UAV and multi-UAV scenarios.

1. Introduction

Classical 2D image stitching methods that perform real-time mapping from a monocular camera in aerial images are built on feature extraction and matching between consecutive frames [1]. These methods are mainly based on computing a homography, which describes the projective mapping between two image planes. Since these calculations are limited to a planar surface, the 3D structure of the observed environment cannot be recovered. To solve this problem, the authors of [2] used the Kanade-Lucas-Tomasi feature tracker and fused the UAV’s IMU (Inertial Measurement Unit) and GPS (Global Positioning System) sensor data. A dense point cloud and a digital surface model were then generated from the 3D camera positions obtained through sensor fusion.
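
The planar model behind these stitching methods can be illustrated with a minimal sketch of homography estimation by the direct linear transform (DLT): each point correspondence contributes two linear equations in the nine entries of H, and the right singular vector of the stacked system with the smallest singular value gives H up to scale. This is a generic illustration, not the specific algorithm of [1]; it assumes exact, outlier-free correspondences, omits the coordinate normalization that practical implementations add, and the function names are hypothetical.

```python
import numpy as np


def estimate_homography(src: np.ndarray, dst: np.ndarray) -> np.ndarray:
    """Estimate a 3x3 H with dst ~ H @ src from >= 4 (x, y) pairs using the DLT."""
    if src.shape != dst.shape or src.shape[0] < 4:
        raise ValueError("need at least 4 matching (x, y) point pairs")
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        # Each correspondence yields two equations in the 9 unknown entries of H.
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    A = np.asarray(rows, dtype=float)
    # The null vector of A is the last right singular vector.
    _, _, vt = np.linalg.svd(A)
    H = vt[-1].reshape(3, 3)
    return H / H[2, 2]  # fix the arbitrary scale so that H[2, 2] == 1


def apply_homography(H: np.ndarray, pts: np.ndarray) -> np.ndarray:
    """Map Nx2 points through H, including the perspective division."""
    homog = np.hstack([pts, np.ones((len(pts), 1))])
    mapped = homog @ H.T
    return mapped[:, :2] / mapped[:, 2:3]
```

With real feature matches, a robust estimator such as OpenCV's `cv2.findHomography` with the `cv2.RANSAC` flag is the usual choice, since a single wrong match can corrupt the plain least-squares solution above. Because a single H can only relate two views of a plane (or of a purely rotating camera), the sketch also makes the limitation discussed above concrete: relief such as buildings or terrain is not modelled.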

Structure from Motion methods can also be used for orthomosaic generation. Several tools implement Structure from Motion, such as OpenMVG [3] and PhotoScan [4]. These methods generally proceed through the following stages: feature extraction and matching, image alignment and bundle adjustment, sparse point cloud generation, dense point cloud and mesh generation, and finally orthomosaic generation. To generate the final orthomosaic with Structure from Motion, all images used in the mapping process must be available in advance, and the mapping process takes a long time. Therefore, Structure from Motion based methods are not suitable for real-time, incremental use.
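
Bundle adjustment, one of the stages listed above, refines camera poses and 3D points by minimizing the reprojection error: the pixel distance between each observed feature and the projection of its 3D point through a pinhole camera, x ~ K(RX + t). The following minimal sketch shows that residual and, to stay short, solves only a motion-only sub-problem (one camera's translation, with rotation, intrinsics and points held fixed) by Gauss-Newton with a finite-difference Jacobian. Full bundle adjustment optimizes all poses and points jointly, usually with a sparse Levenberg-Marquardt solver; the names here are hypothetical and this is not the implementation used by [3] or [4].

```python
import numpy as np


def project(K, R, t, X):
    """Project Nx3 world points into a pinhole camera with intrinsics K and pose (R, t)."""
    Xc = X @ R.T + t          # world -> camera coordinates
    uvw = Xc @ K.T            # camera -> homogeneous pixel coordinates
    return uvw[:, :2] / uvw[:, 2:3]


def reprojection_residuals(K, R, t, X, observed):
    """Stacked (predicted - observed) pixel errors; BA minimizes 0.5 * ||r||^2."""
    return (project(K, R, t, X) - observed).ravel()


def refine_translation(K, R, t0, X, observed, iters=10, eps=1e-6):
    """Gauss-Newton on the camera translation only (a motion-only sub-problem)."""
    t = np.asarray(t0, dtype=float).copy()
    for _ in range(iters):
        r = reprojection_residuals(K, R, t, X, observed)
        # Forward-difference Jacobian of the residuals w.r.t. the 3 translation entries.
        J = np.empty((r.size, 3))
        for k in range(3):
            dt = np.zeros(3)
            dt[k] = eps
            J[:, k] = (reprojection_residuals(K, R, t + dt, X, observed) - r) / eps
        # Gauss-Newton step: least-squares solution of J @ step = -r.
        step, *_ = np.linalg.lstsq(J, -r, rcond=None)
        t += step
    return t
```

The same residual drives the full problem; only the parameter vector grows. Because each residual depends on a single camera and a single point, the Jacobian is very sparse, which is what makes large-scale bundle adjustment tractable, but it still runs over all images at once, consistent with the offline nature of Structure from Motion described above.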

Unlike Structure from Motion, SLAM (Simultaneous Localization and Mapping) methods are used for real-time 3D mapping and localization. Monocular SLAM has recently become one of the most studied topics in robotics and computer vision, and it is considered a key technique for navigation and mapping in unknown environments. Monocular SLAM algorithms are broadly categorized into feature-based and direct methods. Feature-based SLAM algorithms detect features in each frame, track them across consecutive frames, and use the resulting correspondences to estimate the camera pose and build a point cloud map [5, 6, 7]. Direct methods, on the other hand, operate on the pixel intensities of the images instead of extracted features. Because they use more of the information in each image, direct methods tend to produce much more detailed maps of the observed environment [8, 9]. However, under illumination changes and sudden camera movements, feature-based methods are more robust and estimate the camera pose more accurately than direct methods. There are also semi-direct approaches such as SVO [10], which operate on pixels with strong intensity gradients and achieve high speed. In [11], the authors proposed a dense monocular reconstruction method that integrates SVO as its camera pose estimation module. In [12], the authors use a feature-based SLAM method together with the GPS data of the UAV to generate 2D orthomosaic maps from aerial images. In this study, ORB-SLAM [6], a very fast and robust feature-based monocular SLAM method, is used for camera pose estimation and sparse point cloud generation in order to generate 2D and 3D orthomosaics. In [13], the authors propose a monocular SLAM-based method to generate 2D and 3D orthomosaic images. Similar to our study, their method uses ORB-SLAM as the monocular SLAM component.
In addition, at the orthomosaic stage, their method determines the values of the grid cells in overlapping regions with a probabilistic approach. In our proposed method, by contrast, cell values are determined according to the minimum elevation angle, similar to [2]. Moreover, in our method, a deep learning based object detector trained on a novel dataset is integrated into the mapping pipeline to detect objects in the rectified images. By marking these detections on the 2D and 3D maps, the real-world positions of the detected objects can be computed and used to produce a more informative map. Another method similar to ours is GPS-SLAM [14], which is expected to perform well on sparse datasets captured at 1 FPS (frames per second) or below. The method augments ORB-SLAM’s pose estimation by fusing GPS and inertial data. The authors also increase the number of features extracted by ORB-SLAM, which substantially reduces the computation speed. On such sparse datasets, GPS-SLAM is more robust and more accurate than ORB-SLAM. A drawback is that at frame rates above 1 FPS, GPS-SLAM fails to track the camera and estimate its pose, which prevents its use in the pose estimation stage of an end-to-end mapping and localization pipeline. Unlike GPS-SLAM, our method achieves more robust camera pose estimation than ORB-SLAM at higher frame rates, as demonstrated in our experiments.
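
Because monocular SLAM recovers the camera trajectory only up to an unknown scale, rotation and translation, one common way to combine its camera positions with GPS data is to estimate a similarity transform (scale s, rotation R, translation t) between the two trajectories, for example with Umeyama's closed-form least-squares method [21]. The sketch below assumes the GPS fixes have already been converted to a local metric frame (for example ENU) and time-associated with SLAM keyframes; it illustrates the technique and is not necessarily how the proposed system or GPS-SLAM [14] fuses the data. The function names are hypothetical.

```python
import numpy as np


def umeyama(src: np.ndarray, dst: np.ndarray, with_scale: bool = True):
    """Return (s, R, t) minimizing sum ||dst_i - (s * R @ src_i + t)||^2 (Umeyama, 1991)."""
    n, m = src.shape
    mu_src, mu_dst = src.mean(axis=0), dst.mean(axis=0)
    src_c, dst_c = src - mu_src, dst - mu_dst
    var_src = (src_c ** 2).sum() / n           # variance of the source points
    cov = dst_c.T @ src_c / n                  # cross-covariance Sigma_xy
    U, D, Vt = np.linalg.svd(cov)
    S = np.eye(m)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        S[-1, -1] = -1.0                       # avoid returning a reflection
    R = U @ S @ Vt
    s = np.trace(np.diag(D) @ S) / var_src if with_scale else 1.0
    t = mu_dst - s * R @ mu_src
    return s, R, t


def apply_similarity(s, R, t, pts):
    """Transform Nx3 points (e.g. SLAM map points) into the target (GPS) frame."""
    return s * pts @ R.T + t
```

In use, `src` would hold keyframe positions from the monocular SLAM trajectory and `dst` the matching GPS positions; the recovered (s, R, t) can then be applied with `apply_similarity` to every map point so that the sparse cloud is expressed in metric, georeferenced coordinates. The solution is unique only if at least three of the positions are non-collinear, which a single straight flight segment does not satisfy, and with noisy GPS the estimate would likely need to be recomputed as keyframes arrive and combined with outlier rejection.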

References

- M. Brown, D. G. Lowe, “Automatic panoramic image stitching using invariant features”, International Journal of Computer Vision, vol. 74, no. 1, pp. 59–73, 2007.

- T. Hinzmann, J. L. Schönberger, M. Pollefeys, R. Siegwart, “Mapping on the fly: Real-time 3D dense reconstruction, digital surface map and incremental orthomosaic generation for unmanned aerial vehicles”, in Field and Service Robotics, pp. 383–396, Springer, 2018.

- P. Moulon, P. Monasse, R. Perrot, R. Marlet, “OpenMVG: Open multiple view geometry”, in International Workshop on Reproducible Research in Pattern Recognition, pp. 60–74, Springer, 2016.

- G. Verhoeven, “Taking computer vision aloft – archaeological three-dimensional reconstructions from aerial photographs with PhotoScan”, Archaeological Prospection, vol. 18, no. 1, pp. 67–73, 2011.

- G. Klein, D. Murray, “Parallel tracking and mapping for small AR workspaces”, in Proceedings of the 2007 6th IEEE and ACM International Symposium on Mixed and Augmented Reality, pp. 1–10, IEEE Computer Society, 2007.

- R. Mur-Artal, J. M. M. Montiel, J. D. Tardós, “ORB-SLAM: A versatile and accurate monocular SLAM system”, IEEE Transactions on Robotics, vol. 31, no. 5, pp. 1147–1163, 2015.

- C. Campos, R. Elvira, J. J. G. Rodríguez, J. M. Montiel, J. D. Tardós, “ORB-SLAM3: An accurate open-source library for visual, visual–inertial, and multimap SLAM”, IEEE Transactions on Robotics, 2021.

- J. Engel, T. Schöps, D. Cremers, “LSD-SLAM: Large-scale direct monocular SLAM”, in European Conference on Computer Vision, pp. 834–849, Springer, 2014.

- G. Pascoe, W. Maddern, M. Tanner, P. Piniés, P. Newman, “NID-SLAM: Robust monocular SLAM using normalised information distance”, in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 1435–1444, 2017.

- C. Forster, M. Pizzoli, D. Scaramuzza, “SVO: Fast semi-direct monocular visual odometry”, in 2014 IEEE International Conference on Robotics and Automation (ICRA), pp. 15–22, IEEE, 2014.

- M. Pizzoli, C. Forster, D. Scaramuzza, “REMODE: Probabilistic, monocular dense reconstruction in real time”, in 2014 IEEE International Conference on Robotics and Automation (ICRA), pp. 2609–2616, IEEE, 2014.

- S. Bu, Y. Zhao, G. Wan, Z. Liu, “Map2DFusion: Real-time incremental UAV image mosaicing based on monocular SLAM”, in 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 4564–4571, IEEE, 2016.

- A. Kern, M. Bobbe, Y. Khedar, U. Bestmann, “OpenREALM: Real-time mapping for unmanned aerial vehicles”, in 2020 International Conference on Unmanned Aircraft Systems (ICUAS), pp. 902–911, IEEE, 2020.

- D. Kiss-Illés, C. Barrado, E. Salamí, “GPS-SLAM: An augmentation of the ORB-SLAM algorithm”, Sensors, vol. 19, no. 22, p. 4973, 2019.

- J. Redmon, A. Farhadi, “YOLOv3: An incremental improvement”, arXiv preprint arXiv:1804.02767, 2018.

- T.-Y. Lin, P. Goyal, R. Girshick, K. He, P. Dollár, “Focal loss for dense object detection”, in Proceedings of the IEEE International Conference on Computer Vision, pp. 2980–2988, 2017.

- W. Liu, D. Anguelov, D. Erhan, C. Szegedy, S. Reed, C.-Y. Fu, A. C. Berg, “SSD: Single shot multibox detector”, in European Conference on Computer Vision, pp. 21–37, Springer, 2016.

- S. Ren, K. He, R. Girshick, J. Sun, “Faster R-CNN: Towards real-time object detection with region proposal networks”, in Advances in Neural Information Processing Systems, pp. 91–99, 2015.

- Z. Tian, C. Shen, H. Chen, T. He, “FCOS: A simple and strong anchor-free object detector”, IEEE Transactions on Pattern Analysis and Machine Intelligence, 2020.

- C. Zhu, F. Chen, Z. Shen, M. Savvides, “Soft anchor-point object detection”, in Computer Vision – ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part IX, pp. 91–107, Springer, 2020.

- S. Umeyama, “Least-squares estimation of transformation parameters between two point patterns”, IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 13, no. 4, pp. 376–380, 1991.

- M. Bertalmio, A. L. Bertozzi, G. Sapiro, “Navier-Stokes, fluid dynamics, and image and video inpainting”, in IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), vol. 1, pp. I–I, IEEE, 2001.

- P. Fankhauser, M. Hutter, “A universal grid map library: Implementation and use case for rough terrain navigation”, in Robot Operating System (ROS), pp. 99–120, Springer, 2016.

- J. L. Bentley, “Multidimensional binary search trees used for associative searching”, Communications of the ACM, vol. 18, no. 9, pp. 509–517, 1975.

- J. Redmon, A. Farhadi, “YOLO9000: Better, faster, stronger”, in IEEE Conference on Computer Vision and Pattern Recognition, pp. 7263–7271, 2017.

- K. He, X. Zhang, S. Ren, J. Sun, “Deep residual learning for image recognition”, in IEEE Conference on Computer Vision and Pattern Recognition, pp. 770–778, 2016.

- R. Kümmerle, B. Steder, C. Dornhege, M. Ruhnke, G. Grisetti, C. Stachniss, A. Kleiner, “On measuring the accuracy of SLAM algorithms”, Autonomous Robots, vol. 27, no. 4, pp. 387–407, 2009.